The Linux distribution I use is openSUSE 11.0 with a KDE 3.x shell, but the procedure below should work on any modern Linux distribution with either a KDE or a GNOME shell. As a matter of fact, I was reading posts from the Ubuntu forums while trying to enable desktop effects on my openSUSE machine. I was also able to reproduce the procedure on KDE 4.x.

Background: What Is What

Compiz and Compiz Fusion

Compiz is a low-level platform that enables the desktop effects we’ll be leveraging. Compiz Fusion is an upgrade on top of Compiz that implements a large variety of effects. While Compiz Fusion has merged into Compiz, the tools that manage them are still separate. I encourage you to read the Compiz wiki if you want to get the best out of your desktop.

Emerald

Emerald is a window decorator that works on top of Compiz Fusion. The Compiz wiki claims it is no longer supported and that Compiz Fusion itself should be used instead, but Emerald seems to work better than Compiz’s default window decorator. Besides, the Mac themes I’ve found require Emerald.

KDE/GNOME

For everything else (dialog box controls, scroll bars, icons, etc.), you still need your existing GUI shell to do the groundwork.

Step 1: Enable Compiz Effects

Use the Distribution page on the Compiz wiki as a roadmap. It links to the pages on the most popular distributions’ web sites that explain how to enable Compiz effects on each of those distributions. What follows is my digest of those procedures. Make sure you understand each step and complete it successfully before moving on.

Update your video driver

Before you start messing with graphics, make sure you have the latest driver for your graphics card. For openSUSE, there are one-click installs for both NVIDIA drivers and ATI drivers.
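If you want a quick sanity check after updating the driver, the sketch below may help. It is not part of the original procedure: it assumes the `glxinfo` utility is installed (on many distributions it ships in a Mesa utilities package) and simply looks for the `direct rendering: Yes` line in its output. The function names are my own.

```python
import shutil
import subprocess


def has_direct_rendering(glxinfo_output: str) -> bool:
    """Return True if the given glxinfo output reports 'direct rendering: Yes'."""
    for line in glxinfo_output.splitlines():
        key, _, value = line.partition(":")
        if key.strip().lower() == "direct rendering":
            return value.strip().lower().startswith("yes")
    return False


def check_driver() -> bool:
    """Run glxinfo, if it is available, and report whether direct rendering is on."""
    if shutil.which("glxinfo") is None:
        # Assumption: glxinfo may not be installed; treat that as "unknown/no".
        return False
    result = subprocess.run(["glxinfo"], capture_output=True, text=True, check=False)
    return has_direct_rendering(result.stdout)
```

Calling `check_driver()` from a Python prompt inside your graphical session should return `True` when hardware acceleration is working; if it returns `False`, the driver is worth a second look before you continue.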

Install Compiz packages

Go to your software management tool. On openSUSE that is YaST. You’ll find it at: Start menu > Utilities > System > Administrator Settings. Within YaST click on Software > Software Management. Search for ‘compiz’. Read the descriptions and add all the packages that seem to be either runtime or themes. Skip the ‘…-devel’ packages.
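The selection rule above (take everything except the development packages) can be sketched in a few lines. The package names in the example are hypothetical placeholders; the real search results depend on your distribution and repositories.

```python
def runtime_packages(names):
    """Keep every package except development ones (names ending in '-devel')."""
    return [name for name in names if not name.endswith("-devel")]


# Hypothetical search results for 'compiz'; real names will differ.
found = ["compiz", "compiz-devel", "compiz-example-theme"]
print(runtime_packages(found))
```

This only filters names; you still install the resulting packages through your software management tool.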

Enable Compiz effects

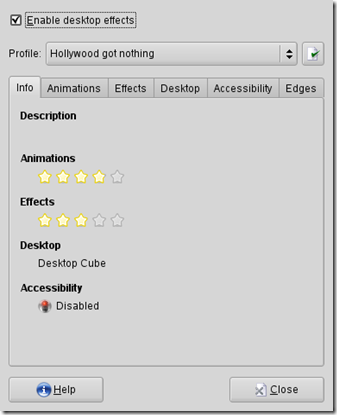

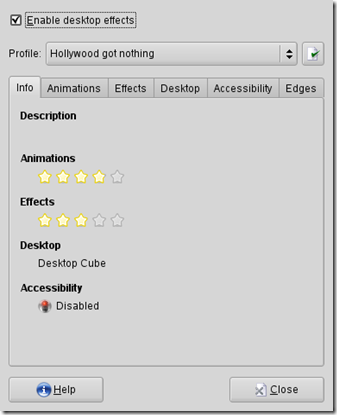

On openSUSE you’ll find this tool at: Start menu > Utilities > Desktop > Desktop Effects. On other distributions, search the Start menu for ‘Desktop Effects’. You’ll see a dialog box where the only control you can change is an Enable desktop effects checkbox. Check it. Now you can choose a pre-built configuration from the drop-down list. I’ve chosen Hollywood got nothing.

You will see some effects, but your desktop may become a little messy: window control buttons may not get drawn, and if you log out, desktop effects will be disabled again. So don’t stop here. Keep going.

Choose Compiz as a window manager

On KDE 3.x, open Personal Settings. (Search for it in the Start menu.) Navigate to KDE Components > Session Manager. Select Compiz from the Window Manager drop-down list.

Log out and log back in.

Note: If your task bar disappears, don’t panic. The task bar exists, but for some reason it doesn’t get drawn. What fixes it is this procedure: right-click where the task bar is supposed to be and select Configure Panel from the context menu. Change the task bar’s location on the screen and click Apply. Then put it back where you want it and click Apply again. Log out and log back in. If this doesn’t work the first time, try it again without putting the task bar back in its place; you can move it back separately afterwards.

Enable and configure Compiz Fusion effects

On openSUSE you’ll find this tool at: Start menu > Utilities > Desktop > CompizConfig Settings Manager. On other distributions, search the Start menu for ‘CompizConfig Settings Manager’. You will see many icons with checkboxes next to them. You enable an effect through the checkbox and configure it through clicking on the icon. For additional help, use the CCSM page on the Compiz wiki.

The important step here is to navigate to Preferences and set Backend and Integration to Flat-File. Log out and log back in after setting this.

Note: Some Compiz Fusion effects may conflict with base Compiz effects. In those cases a message pops up to warn you. I haven’t tried to override base Compiz effects with Compiz Fusion effects. Feel free to try it at your own risk.

Step 2: Make Your Desktop Look Like a Mac

At this point you should have a desktop with Compiz effects and the windows should be decorated according to your existing KDE/GNOME theme. (Actually, my windows weren’t properly decorated.)

Install Emerald

Just like you installed the Compiz packages, search your software management tool for ‘emerald’ and install anything that seems to be either runtime or themes. Skip the ‘…-devel’ packages.

Enable Emerald

Open CompizConfig Settings Manager. Click on the Window Decoration plug-in. In the Command box, enter ‘emerald --replace’. Log out and log back in. Now you should have a default Emerald theme enabled and all windows should be properly decorated.
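If you would like to try the same command by hand before logging out, a minimal sketch follows. It assumes `emerald` is on your PATH and that you run it from within your graphical session; the function names are my own, not part of Emerald or Compiz.

```python
import shlex
import shutil
import subprocess

# The same command entered in the Window Decoration plug-in's Command box.
DECORATOR_COMMAND = "emerald --replace"


def decorator_argv(command: str = DECORATOR_COMMAND) -> list:
    """Split the command string into an argument list for subprocess."""
    return shlex.split(command)


def start_decorator(command: str = DECORATOR_COMMAND):
    """Start the decorator in the background; return None if it isn't installed."""
    argv = decorator_argv(command)
    if shutil.which(argv[0]) is None:
        return None
    # Popen returns immediately, leaving the decorator running.
    return subprocess.Popen(argv)
```

Calling `start_decorator()` should redraw the window borders right away if Emerald is installed. The setting in CompizConfig Settings Manager is still what makes the change survive a new login.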

Download Emerald themes

Go to http://kdelook.org/ and search for ‘OS X’. Alternatively, you may search http://gnome-look.org/ or http://compiz-themes.org/. (I believe those three sites share the same repository.) I have downloaded these two themes:

Feel free to download and try more themes. Emerald theme files are gzip archives with a .emerald extension.
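Since a theme file is just an archive, you can peek inside one before importing it. The sketch below assumes the archive is a tar file under the gzip compression (that is my assumption, not something the theme sites state); the `"r:*"` mode lets Python’s `tarfile` module detect the compression itself. The function name is my own.

```python
import tarfile


def list_theme_files(path):
    """Return the sorted names of the files packed inside an Emerald theme."""
    # "r:*" opens the archive for reading with transparent compression detection.
    with tarfile.open(path, "r:*") as archive:
        return sorted(archive.getnames())
```

For example, `list_theme_files("some-theme.emerald")` (a placeholder file name) returns the list of files in that theme, which is a quick way to tell a real theme from a mislabeled download.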

Manage Emerald themes

On openSUSE you’ll find this tool at: Start menu > Utilities > Desktop > Emerald Theme Manager. On other distributions, search the Start menu for ‘Emerald Theme Manager’. Click on Import and select a downloaded theme file. The new theme should show up on the list immediately.

To select a theme, simply click on it on the list and it will take effect immediately. I’ve chosen Mac OS X Ubuntu Air. When you are done, click on Quit.

Conclusion

There are still components of your desktop that Emerald doesn’t touch – buttons, icons, scroll bars, splash screen, etc. Keep searching http://kdelook.org/, http://gnome-look.org/, and http://compiz-themes.org/ for KDE/GNOME/Compiz themes that make those components look better.